Jake Quilty-Dunn

Faculty of Philosophy

University of Oxford

1. Introduction

The question of whether perception is “informationally encapsulated” from cognition (Fodor 1983) has dominated the study of perception in the past decade. The issue is debated amongst psychologists (e.g., Lupyan 2015; Firestone & Scholl 2016) as well as philosophers (e.g., Prinz 2006, ms; Macpherson 2012; Siegel 2012; Clark 2013; Mole 2015; Block ms). Much of the disagreement involves differing interpretations of specific studies, often focused on nitty-gritty details of experimental design (e.g., Durgin et al. 2009; Proffitt 2009; Gantman & Van Bavel 2014; Firestone & Scholl 2014). A significant amount of disagreement, however, concerns a priori disputes about which mental processes constitute violations of encapsulation.

A major point of contention concerns the role of attention. Some theorists argue that effects of cognition on perception that are mediated by certain forms of attention are compatible with encapsulation (Deroy 2013; Firestone & Scholl 2016). Others write that attention is a vehicle for widespread, systematic violations of encapsulation (Mole 2015; Lupyan 2016; Prinz ms). Still others occupy intermediate positions (e.g., Macpherson 2012). I’ll argue for an extreme thesis: no effect of attention, either on the inputs to perceptual processing or on perceptual processing itself, constitutes a violation of the encapsulation of perception. The upshot of this thesis is not only that all effects mediated by attention are compatible with encapsulation, but also that, contra current practice, debates about the encapsulation of perceptual processing should be separated from debates about the theory-ladenness and normative force of perception.

[expand title=”Target Presentation by Jake Quilty-Dunn” elwraptag=”p”]

2. Encapsulation

Encapsulation is a matter of information access. Jerry Fodor writes that an encapsulated system “does not have access to all of the information that the organism internally represents; there are restrictions upon the allocation of internally represented information to input processes” (1983, 69; see also Scholl 1997). Saying that System A is encapsulated from System B means that A is constrained (in some way to be spelled out later) from accessing information in B. The notion of information access is foundational to the computational theory of mind. Every computational system must have some information available to it. Computational systems transform inputs into outputs, and what determines the relevant input-output mappings is the information available to the system together with the algorithms it implements. Part of what distinguishes computational processes from mere transductions is that the former do, and the latter don’t, bring stored information to bear on their computational transformations such that the output is not merely a slavish registration of the input (Pylyshyn 1984, Chapter 6).

As an illustrative example, take visual “shape from shading,” a process that uses information about the shading of an object’s surface to derive its three-dimensional shape (Ramachandran 1988). The system that implements this process seems to “assume” that light comes from above and slightly to the left (Sun & Perona 1998). Somehow, the information that light comes from above and to the left guides the mapping from shaded two-dimensional surfaces to three-dimensional shapes performed by the shape-from-shading system. Talk of the system making an “assumption” is a metaphorical way of stating that a certain piece of information structures the relevant computations.

This structuring can take place either explicitly or implicitly. In the explicit case, a representation with the content <light comes from above and slightly to the left>—or, more likely, some much more precise specification—is stored in a manner that makes it accessible to the shape-from-shading system. When the system is fed its inputs, it accesses this representation (and other stored information) and derives its outputs in accordance with whatever algorithms it implements. The notion of access involves the activation of a token representation and its functioning directly and without independent intervention in the relevant processes.

In the implicit case, on the other hand, there’s no such representation stored anywhere. Instead, the algorithms implemented by the system accord with the principle that light comes from above and to the left. That information isn’t available for use by any process; it’s merely implicit (or “embodied”—e.g., Devitt 2006, 45) in the operations of the system (Shea 2014). For this piece of information to be “accessed” is simply for it accurately to describe the transformations demanded by the relevant algorithms.
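A toy sketch may help fix the contrast between the two cases. It is only an illustration under simplifying assumptions: the class names, the `light_from_above` field, and the reduction of the assumption to a single yes/no value (dropping "slightly to the left") are all hypothetical and are not drawn from any model of shape-from-shading.

```python
from dataclasses import dataclass


@dataclass
class ExplicitShapeFromShading:
    # Explicit case: the assumption is a stored token that the process
    # reads when it runs. (Hypothetical, simplified to a boolean.)
    light_from_above: bool = True

    def interpret(self, bright_on_top: bool) -> str:
        # The stored representation is accessed and enters the computation.
        if bright_on_top == self.light_from_above:
            return "convex"
        return "concave"


class ImplicitShapeFromShading:
    # Implicit case: no token is stored anywhere; the mapping itself
    # merely accords with the principle that light comes from above.
    def interpret(self, bright_on_top: bool) -> str:
        return "convex" if bright_on_top else "concave"


explicit = ExplicitShapeFromShading()
implicit = ImplicitShapeFromShading()
same_outputs = all(
    explicit.interpret(b) == implicit.interpret(b) for b in (True, False)
)
```

The two systems compute the same input-output mapping, which is one way of seeing why it can be hard to tell the cases apart. They differ in that only the explicit system contains something that could in principle be revised or read by another process.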

Note that ‘explicit’ in this context does not mean ‘conscious’. Some (e.g., Fodor 1981) argue that rules of grammar are explicitly stored in the language faculty and are accessed in deriving the syntactic properties of linguistic expressions, but would nonetheless deny that these representations are ever conscious. The present use of ‘explicit’ thus differs from the use of that term in discussions of implicit vs. explicit attitudes, such as the distinction between implicit biases and conscious attitudes. On a view like Mandelbaum’s (2016), for instance, implicit biases are explicit in the sense that they are realized by mental representations that are directly available for use in various mental processes, and are implicit only in the (very different) sense that they are unconscious.[1]

We can loosely use the term ‘store of information’ to describe both the explicitly and implicitly stored information used by a system to structure its input-output mappings. This term is loose in that implicitly stored information, unlike explicitly stored information, is not literally stored anywhere. Similarly, implicitly stored information, unlike explicitly stored information, is not literally accessed while running computations within the relevant system. Nonetheless, it’s part of the set of information that shapes the processing of a given system, and can thus be counted as part of its store of information.

It’s useful to have a neutral way of talking given how routinely difficult it is to determine whether a piece of information is stored explicitly or implicitly. The case of light coming from above and to the left demonstrates this methodological problem. Is that information an explicit or implicit part of shape-from-shading processing? This is not a rhetorical question, but it’s difficult to know how to go about answering it (though the malleability of the light-from-above assumption in response to feedback from other modalities might suggest explicit storage—see Adams et al. 2004). Another illustrative case is generative grammar (Chomsky 1986; Devitt 2006; Pietroski 2008). Critics notwithstanding, generative linguists believe that the rules of grammar are part of the language faculty’s proprietary store of information. There is, however, no consensus on whether this information is explicitly stored.

With all this in the background, we can give a more precise characterization of encapsulation: System A is encapsulated from System B when A has a proprietary store of information that excludes information stored in B. While operating on its inputs to produce its outputs, A can only access a certain limited domain of information, and a piece of information held in B—even if it’s semantically highly relevant to the processing in A—simply fails to fall within that domain, and thus cannot be accessed by A.

The following passage from Fodor usefully illustrates this point:

One can conceptualize a module as a special-purpose computer with a proprietary database, under the conditions that: (a) the operations that it performs have access only to the information in its database (together, of course, with specifications of currently impinging proximal stimulations); and (b) at least some information that is available to at least some cognitive process is not available to the module. (Fodor 1985, 3)

Encapsulation in this sense underwrites the modularist explanation of recalcitrant perceptual illusions: despite the fact that a piece of information in cognition is semantically relevant to the illusory percept (e.g., one knows that the stimulus is illusory), that information falls outside the proprietary store of information available to perception, and thus the illusion persists.
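Fodor's two conditions can be pictured with a small sketch. Everything named here (the `Module` class, the `cue_implies_longer` entry, the `lines_are_equal` belief) is a hypothetical stand-in chosen for illustration, not a claim about how any actual illusion is computed.

```python
class Module:
    def __init__(self, database: dict):
        # Proprietary database: the only stored information the module reads.
        self._database = dict(database)

    def process(self, proximal_input: dict) -> str:
        # Condition (a): operations have access only to the database,
        # together with the currently impinging input.
        if self._database["cue_implies_longer"] and proximal_input["cue_present"]:
            return "looks longer"
        return "looks equal"


# Condition (b): some information available to cognition is not
# available to the module. This belief is never passed to it.
central_cognition = {"lines_are_equal": True}

vision = Module({"cue_implies_longer": True})
percept = vision.process({"cue_present": True})
```

The belief held in `central_cognition` is semantically relevant to the percept, yet the percept is unchanged by it, because nothing in `process` can reach that store.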

A primary reason encapsulation matters for cognitive science is that it provides a good reason for positing distinct systems. If two processes occur in the same system, then semantically relevant information that is accessible to one process should typically be accessible to the other as well. For example, guessing where a person is from and deciding whether to ask them for directions are distinct cognitive processes, but a single piece of information (e.g., that they only speak Finnish) can be relevant to both processes. In this example, when told that the person speaks Finnish you might form only a single representation, <that person only speaks Finnish>, which is stored in central cognition and is accessible to both processes (e.g., it might lead you to guess that they are from Finland and, if you don’t speak Finnish, to decide not to ask them for directions). This sort of case would provide a compelling reason to think that the processes of guessing where a person is from and deciding whether to ask them for directions both take place in the same central-cognitive system.

If, on the other hand, two processes cannot access the same store of information, then there is good reason to think they don’t take place in the same system. It’s for this reason that Firestone and Scholl, for example, take the idea of widespread violations of the encapsulation of perception from cognition to threaten the idea of “a salient ‘joint’ between perception and cognition” (2016, 3). Some theorists on the other side of the debate agree. Clark, for example, writes that the putative prevalence of cognitive penetration “makes the lines between perception and cognition fuzzy, perhaps even vanishing” (2013, 190). Others, such as Block (ms), argue that cognitive penetration is widespread but that perception is nonetheless distinct from cognition, appealing to factors like differences in representational format (cf. Quilty-Dunn 2016; Mandelbaum forthcoming). It’s nonetheless important to the project of distinguishing perception from cognition to determine which effects are consistent with the encapsulation of perception.

3. Attention

3.1.—Attention and perception. Attention is the selection or prioritization of information for further processing (though how this should be understood is a matter of debate—see, e.g., Nanay 2010; Mole 2011; Prinz 2012; Wu 2014). Attention can consist in some information being processed rather than other available information, or in constrained forms of modulation, such as increasing “gain,” i.e., signal strength, and “tuning,” i.e., noise reduction (Ling et al. 2009). Attentional modulation can increase spatial resolution (Carrasco & Barbot 2014), boost perceived contrast (Carrasco et al. 2004), and warp perceived distance between items (Vickery & Chun 2010).

In the visual system, attention comes in three broad forms. Spatial attention selects information from certain spatial locations and inhibits other locations. Spatial attention can be overt, as when you move your eyes to change your gaze, or covert, as when you maintain fixation on a single point but attend to something in your periphery. Feature-based attention selects representations of certain features and inhibits others; for example, you might search for your favorite red shirt in your drawer and attend to red over the other available colors. Finally, object-based attention selects representations of certain objects; for example, you might watch a flock of birds and attend to a particular bird, tracking it as it moves.

Cognition can direct all three varieties of visual attention. For instance, you might attend to a certain location because you expect something to appear there; you might attend to a certain feature because you want to find your shirt; and you might attend to a certain object because it’s a bird you intend to track. One might be tempted to conclude that these are all instances of cognition violating the encapsulation of perception.

Firestone and Scholl (2016) argue instead that at least some forms of attention merely constitute changing of inputs to otherwise encapsulated perceptual systems. An example would be when you choose to change where your eyes are fixated; though this is a cognition-driven change in perception, it operates not by violating encapsulation but instead by moving the eyes to change the inputs to perception. Though covert spatial attention doesn’t literally involve moving the eyes, it’s intuitively the same sort of effect and thus changes perception only by changing inputs (see also Deroy 2013).

Fiona Macpherson (2012) raises the possibility that feature-based attention directed by cognition violates encapsulation (see also Mole 2015; Block ms). Unlike spatial attention, it’s not simply a change in where we are looking, and it seems moreover to require antecedent processing (e.g., it seems to require processing of red in order to attend to the red things in the room). A modularist may reply that attention does not only select sensory inputs, but also selects representations as inputs for downstream processing, even if those representations were the result of earlier processes.[2] Others argue that attention has widespread effects on perceptual processing that are not limited to prioritization and modulation of inputs (e.g., Mole 2015; Lupyan 2016; Clark 2016). Even Firestone and Scholl only take their argument to save encapsulation from “peripheral” (i.e., input-modulating) forms of attention, and say they are “sympathetic” (2016, 61) to an attack on encapsulation that focuses on non-peripheral forms of attention. They object only that such proposals have at present remained too abstract relative to the experimental evidence, thus leaving encapsulation-based theories of perception vulnerable to detailed accounts of non-peripheral forms of top-down attention.

3.2.—Attention and encapsulation. A methodological problem arises here: how do we determine which attentional effects might, and which might not, violate encapsulation? The debate, so stated, threatens to become about warring intuitions. The idea that covert spatial attention fails to violate encapsulation seems to rest on an intuitive parallel with overt spatial attention (e.g., Macpherson 2012, 28). Theorists like Macpherson (2012), Prinz (ms), and Block (ms) invite us to have the opposite intuitions about feature-based and (in Prinz’s case) object-based attention, since these forms of attention seem to involve a tighter connection between the contents of our intentions and the contents of our resultant perceptual states. Indeed, Pylyshyn’s classic formulation of cognitive penetration is of a “semantically coherent” causal relation between cognition and perception (1999, 342), and the notion of semantic coherence is hard to explicate without intuitions. For example, feature-based attention to red things that alters perception of redness in response to thoughts about redness seems to instantiate a causal, semantically coherent relation (Macpherson 2012; Block ms).

Pylyshyn’s influential formulation of cognitive penetration in terms of semantically coherent causal relations between thought and perception thus threatens to make mundane forms of attention into examples of cognitive penetration. Note, however, that the presence of a semantically coherent causal relation between a thought and the output of some perceptual process says nothing about stores of information. For all that Pylyshyn’s informal definition of cognitive penetration says, there could be semantically coherent causal relations between thoughts and percepts without those thoughts being part of the store of information available to the relevant perceptual process.[3] Typical formulations of cognitive penetration in the literature therefore fail to track the notion of violation of encapsulation (though see note 3; cf. Gross 2017).

If a representation is held in a system’s proprietary store, it is directly available to be accessed in running computations. No additional process is necessary for it to affect processing. Every computational system can immediately access its proprietary information store either implicitly—i.e., running algorithms that accord with it—or explicitly—i.e., activating the representation and having it enter into the online computational process. Access of proprietary information is direct or immediate in the sense that there’s no intervening process except the activation of the stored representation.

Consider, for example, the access of a belief stored in long-term memory in central cognition. Barring some sort of malfunction or independent intervention, once the belief is accessed (which, since it’s explicitly stored, amounts to activating the representation), it will enter into central cognitive processes like inference (Braine & O’Brien 1998) and the facilitation of lexical recognition (Meyer & Schvaneveldt 1971). The fact that representations stored in a system currently running some computation are immediately entered into that computation via access explains why, for example, subliminally presenting the minor premise in a modus ponens argument after consciously presenting the major premise results in acquisition of the conclusion (Reverberi et al. 2012). Assuming that modus ponens describes an algorithm implemented in central cognition, then once the major premise (e.g., ‘If there’s an X then there’s a Y’) is represented in central cognition, activating the minor premise (e.g., ‘There’s an X’) is causally sufficient to yield the conclusion as output (e.g., ‘There’s a Y’). The representation, once accessed, is integrated with currently running processes to yield an output.
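The modus ponens case can be put as a toy sketch, on the stated assumption that modus ponens describes an algorithm the system implements. The `CentralCognition` class and its method names are hypothetical and serve only to illustrate direct access: activating the minor premise is all it takes for the conclusion to follow.

```python
class CentralCognition:
    def __init__(self):
        # Conditionals currently represented, as (antecedent, consequent) pairs.
        self.conditionals = []
        # Propositions currently active in the system.
        self.active = set()

    def represent_conditional(self, antecedent: str, consequent: str) -> None:
        self.conditionals.append((antecedent, consequent))

    def activate(self, proposition: str) -> set:
        # Activation is the only step: once active, the representation
        # enters the running inference with no intervening process.
        self.active.add(proposition)
        changed = True
        while changed:
            changed = False
            for antecedent, consequent in self.conditionals:
                if antecedent in self.active and consequent not in self.active:
                    self.active.add(consequent)
                    changed = True
        return self.active


mind = CentralCognition()
mind.represent_conditional("there's an X", "there's a Y")  # major premise
result = mind.activate("there's an X")                     # minor premise
```

Nothing in `activate` distinguishes how the minor premise arrived, which mirrors the point that a subliminally presented premise suffices once it is active in the system.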

It may be clear at this point why an effect of cognition on perception mediated by attention cannot violate encapsulation: it is not a form of information access. A state in cognition may cause an attentional effect on perceptual processing and thereby alter the output of perception. Moreover, it may do so in a semantically coherent way, such that the output of perception is semantically related to the state in cognition. But the state in cognition isn’t thereby just another representation housed in the perceptual system’s store of information. Instead, it can only exert an influence on the perceptual system by way of attentional prioritization and modulation. If a representation in cognition can only affect perceptual processing via attention, that immediately separates it from representations in the relevant proprietary information store; the latter are accessed directly, while the former isn’t. States in cognition that can only affect perception via attention are thus never part of the store of information proprietary to a perceptual system, and so don’t violate the encapsulation of perception from cognition.[4]

Take Fig. 1, which to an innocent eye may appear to contain a chaos of dots. However, upon learning that the image depicts a Dalmatian, the dog may become visible. Suppose that this effect works through activating your concept of Dalmatians and using conceptual knowledge of what they look like to guide which locations and features you attend to, thereby affecting shape perception in a semantically coherent way. So described, there is no reason to say additionally that this cognitive information is accessed by the shape-perception process. The cognitive states instead guide spatial, feature-based, and, ultimately, object-based attention, and these forms of attention modulate perceptual processing. The store of information proprietary to the relevant shape-perception processes need not be expanded to incorporate those cognitive states.

Figure 1—Top-down attention at work

Christopher Mole (2015) cites Kravitz and Behrmann (2011) as providing experimental evidence for top-down attention operating within (rather than before) perceptual processing. Kravitz and Behrmann found effects of attentional cues on a letter-discrimination task that were driven by the shape, color, and location of previewed objects. A cue would improve letter discrimination not only if the letter appeared at the same location or in the same object as the cue, but also if it appeared in the location of another object that shared features (e.g., shape or color) with the object in which the cue appeared. Mole (as well as Kravitz and Behrmann) argues that spatial, feature-based, and object-based attention conspire to organize perceived scenes and guide perceptual discriminations. Mole argues further that this interaction can be sensitive to learned categories such as letter identities (2015, 230–231).

As mentioned above, a modularist could reply that these interactions of attentional effects take place at the output-input interfaces of hierarchically nested modules. But the point presently at issue is that encapsulation isn’t violated even if attention operates within modular processing. Attention is the prioritization and modulation of perceptual signals, not a form of information access. Prioritization and modulation within modules may, for example, affect which stored information is accessed in online perceptual processing by biasing competition between signals for “representation, analysis, or control” (Desimone & Duncan 1995, 194; see Mole 2015, 231ff). The cognitive states that drive such attentional effects, however, are not thereby among the information accessed and computed over in the relevant perceptual processes.

One might object that attention simply is perceptual processing. Mole, for instance, writes that attention is “inherent to the basic structure of the neural architecture” of perception (2015, 233). If attention is a perceptual process, one might argue, then cognitively driven attention is ipso facto a case where a perceptual process accesses cognition. But this claim, depending on how it’s meant, is either trivial or false. If the claim is merely that attention (qua perceptual process) accesses information in cognition, then it’s trivial; nobody on any side of the debate about encapsulation would deny that attention can be driven by cognitive states. If, on the other hand, the claim is that the independent perceptual processes modulated by attention thereby access information in cognition, then it’s false. There is a clear difference between (i) a process that accesses representation R and (ii) a process that’s affected by a separate process that accesses R. The latter process does not access R, and therefore does not have R within its store of information.

The argument in this paper thus does not require arguing that attention is a separate system from perception, but only that attention is not identical to the processes (e.g., distance perception) it modulates. Since attention modulates such processes, it cannot be identical to such processes, even if it is genuinely part of the perceptual system and is deeply intertwined with the processes it modulates. Perception of contrast, for example, can be modulated by attention (Carrasco et al. 2004). This attentional modulation may dramatically alter the perception of contrast to such an extent and with such regularity that contrast is never computed independently of attentional modulation (I don’t mean to suggest that this is actually true). Attention may therefore be “inherent to the basic structure of the neural architecture” of the perception of contrast. Nonetheless, the perceptual computation of contrast is not identical to the attentional modulation of that computation. If they were identical, it would not make sense to say that the one modulates the other. The fact that attention can modulate perceptual processing and can be driven by cognitive states therefore does not entail that the modulated perceptual processing accesses and computes over those cognitive states.

Consider a putative case where a cognitive state is genuinely accessed by perception. For example, suppose that a visual subsystem that implements perception of distance can access a desire for the perceived object and thereby make it appear closer (Balcetis & Dunning 2010). In that case, the store of information accessible to the distance-perception system includes desires stored in central cognition, and these desires can be computed over by the distance-perception system. Suppose instead, however, that the desire guides attention such that you attend more to things you want, and the perceptual signal is thus boosted (even, we can stipulate, at a point within the relevant perceptual process rather than at the input stage). Boosting the signal then causes the object to appear larger and closer. In the second case, there’s no explanatory reason to say the desire is part of the store of information accessible to the distance-perception system. The only “perceptual process” to which the desire is accessible is the deployment of attention.
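The second case can be sketched in a few lines. The function names, the gain value of 1.5, and the inverse relation between signal strength and apparent distance are all assumptions made for illustration; they are not taken from Balcetis and Dunning or from any model of distance perception.

```python
def deploy_attention(cognition: dict, obj: str) -> float:
    # Attention is the only process here that reads the cognitive state.
    # It returns a gain, not the desire itself. (The value 1.5 is arbitrary.)
    return 1.5 if obj in cognition["desired"] else 1.0


def perceive_distance(signal: float, gain: float = 1.0) -> float:
    # The distance process receives a signal and a gain. It takes no
    # cognitive state as an argument and so cannot compute over one.
    # Toy assumption: a stronger signal yields a smaller apparent distance.
    return 10.0 / (signal * gain)


cognition = {"desired": {"chocolate"}}
gain = deploy_attention(cognition, "chocolate")
attended = perceive_distance(2.0, gain)
unattended = perceive_distance(2.0)
```

Here the desire makes a semantically coherent difference to the output, since the desired object appears closer, yet the desire figures only in `deploy_attention`. That is the difference between a process that accesses R and a process affected by a separate process that accesses R.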

It turns out that Firestone and Scholl’s question of whether attention is merely a changing of inputs is largely beside the point. Even if top-down attention operates within the processing of a module, it doesn’t violate the encapsulation of that module. The module can still retain a proprietary store of information that affects its outputs without being mediated by attention or any other mechanism (aside from information access). There can thus, in principle, be widespread effects of top-down attention on “every stage and level of processing” (Clark 2016) without violating the encapsulation of perception.

4. Larger Issues

A reader familiar with the philosophical literature on cognitive penetration might come away with the following question: Who cares about informational encapsulation? The idea that cognition affects the outputs of perception has serious consequences for epistemology (e.g., Siegel 2012; Deroy 2013), aesthetics (Stokes 2014), the theory-observation distinction (Churchland 1988), and even the role of implicit bias in police shootings (e.g., Correll et al. 2002). The technical notion of encapsulation, on the other hand, is purely a construct of theories of mental architecture. The thesis that perceptual systems are distinguished from cognitive systems because the former are encapsulated from the latter is important for answering foundational questions in cognitive science about the basic structure of the mind (Quilty-Dunn 2017). It’s far from obvious that this thesis should be expected to do important work in epistemology, ethics, or the theory-observation distinction (contra Fodor 1983; 1985).

Some theorists (e.g., Stokes 2014) advocate for a merging of all these various interests in adjudicating putative cases of cognitive penetration. One of the upshots of this paper is that these interests should not be so merged. Widespread effects of top-down attention may not threaten the informational encapsulation of perceptual systems, but they may nonetheless have serious consequences for the epistemic status, theory-ladenness, and moral import of perception. A percept distorted by top-down attention guided by the belief that p may be used to justify the belief that p, for example, thereby generating the kind of circular patterns of justification that worry Susanna Siegel (2012; 2013).

There are surely constraints on the ways in which cognition can affect perception via attention. For example, cognitively driven attention can enable you to see the Dalmatian in Figure 1; but while you may associate Dalmatians with firefighters, attention alone cannot make you see a firehouse in the image. This sort of limitation means attention does not completely erase the epistemic role of perception in constraining what it is reasonable to believe about your environment. But cognitively driven attention can still wreak epistemic havoc. For example, a fearful perceiver might boost signals pertaining to snake-like features of ambiguous stimuli, resulting in a percept as of a snake (Prinz & Seidel 2012). This instantiates a circular pattern whereby a fear-state influences perception, and the percept then purports to justify the fear-state (see Siegel 2013 for more discussion of the epistemic role of attentional top-down effects).

The debate about top-down effects has proceeded in a way that ties together questions about information access with questions about whether perceptual processing is influenced by cognition in theoretically and normatively significant ways. Dustin Stokes’s (2014; forthcoming) “consequentialist” methodology for determining whether an effect constitutes cognitive penetration is a particularly explicit example, but the tendency runs subliminally through much of the literature. Questions about whether cognition penetrates perception (i.e., whether cognition exerts a semantically coherent effect over how the perceptual process operates) are not identical to questions about whether cognition violates the encapsulation of perception (i.e., whether cognitive states are accessed and computed over by perceptual processes).

Effects mediated by attention need not violate encapsulation to “demonstrate an epistemologically significant way in which the mechanisms that are responsible for our thinking and judging are entwined with the mechanisms that are responsible for our perception of the world” (Mole 2015, 219). And in cases where top-down attention is shaped by our theories or our implicit biases, the consequences may be of moral and theoretical concern as well. Research on these topics shouldn’t be beholden to technical concerns about whether perceptual systems can be architecturally distinguished from cognition by appeal to informational encapsulation.

References

Adams, W.J., Graf, E.W., & Ernst, M.O. (2004). Experience can change the “light-from-above” prior. Nature Neuroscience 7(10), 1057–1058.

Balcetis, E., & Dunning, D. (2010). Wishful seeing: More desired objects are seen as closer. Psychological Science 21(1), 147–152.

Bhalla, M., & Proffitt, D.R. (1999). Visual-motor recalibration in geographical slant perception. Journal of Experimental Psychology: Human Perception & Performance 25, 1076–1096.

Block, N. (ms). A joint in nature between cognition and perception without modularity of mind.

Braine, M.D.S., & O’Brien, D.P., eds. (1998). Mental logic. Mahwah, NJ: Erlbaum.

Carrasco, M., & Barbot, A. (2014). How attention affects spatial resolution. Cold Spring Harbor Symposia on Quantitative Biology 79, 149–160.

Carrasco, M., Ling, S., & Read, S. (2004). Attention alters appearance. Nature Neuroscience 7(3), 308–313.

Chomsky, N. (1986). Knowledge of Language: Its Nature, Origin, and Use. Westport, CT: Praeger.

Clark, A. (2013). Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behavioral and Brain Sciences 36(3), 181–204.

Correll, J., Park, B., Judd, C.M., & Wittenbrink, B. (2002). The police officer’s dilemma: Using ethnicity to disambiguate potentially threatening individuals. Journal of Personality and Social Psychology 83(6), 1314–1329.

Deroy, O. (2013). Object-sensitivity versus cognitive penetrability of perception. Philosophical Studies 162(1), 87–107.

Desimone, R., & Duncan, J. (1995). Neural mechanisms of selective visual attention. Annual Review of Neuroscience 18, 193–222.

Devitt, M. (2006). Ignorance of Language. New York: OUP.

Durgin, F.H., Baird, J.A., Greenburg, M., Russell, R., Shaughnessy, K., & Waymouth, S. (2009). Who is being deceived? The experimental demands of wearing a backpack. Psychonomic Bulletin & Review 16(5), 964–969.

Firestone, C. & Scholl, B.J. (2014). Enhanced visual awareness for morality and pajamas? Perception vs. memory in ‘top-down’ effects. Cognition 136, 409–416.

Firestone, C. & Scholl, B.J. (2016). Cognition does not affect perception: Evaluating the evidence for “top-down” effects. Behavioral and Brain Sciences 39, 1–72.

Fodor, J. (1981). Introduction: Some notes on what linguistics is talking about. In N. Block (ed.), Readings in the Philosophy of Psychology, Vol. 2 (Cambridge, MA: Harvard University Press).

—. (1983). Modularity of Mind. Cambridge, MA: MIT Press.

—. (1985). Précis of The Modularity of Mind. Behavioral and Brain Sciences 8(1), 1–42.

Gantman, A.P., & Van Bavel, J.J. (2014). The moral pop-out effect: Enhanced perceptual awareness of morally relevant stimuli. Cognition 132, 22–29.

Ling, S., Liu, T., & Carrasco, M. (2009). How spatial and feature-based attention affect the gain and tuning of population responses. Vision Research 49(10), 1194–1204.

Lupyan, G. (2015). Cognitive penetrability of perception in the age of prediction: Predictive systems are penetrable systems. Review of Philosophy and Psychology 6(4), 547–569.

—. (2016). Not even wrong: The “it’s just X” fallacy. Behavioral and Brain Sciences.

Macpherson, F. (2012). Cognitive penetration of colour experience: Rethinking the issue in light of an indirect mechanism. Philosophy and Phenomenological Research 84(1), 24–62.

Mandelbaum, E. (2016). Attitude, inference, association: On the propositional structure of implicit bias. Noûs 50(3), 629–658.

—. (forthcoming). Seeing and conceptualizing: Modularity and the shallow contents of perception. Philosophy and Phenomenological Research.

Meyer, D.E. & Schvaneveldt, R.W. (1971). Facilitation in recognizing pairs of words: Evidence of a dependence between retrieval operations. Journal of Experimental Psychology 90, 227–234.

Mole, C. (2011). Attention is Cognitive Unison. Oxford: OUP.

—. (2015). Attention and cognitive penetration. In J. Zeimbekis & A. Raftopoulos (eds.), The Cognitive Penetrability of Perception: New Philosophical Perspectives (Oxford: OUP), 218–238.

Nanay, B. (2010). Attention and perceptual content. Analysis 70(2), 263–270.

Pietroski, P. (2008). Think of the children. Australasian Journal of Philosophy 86(4), 657–669.

Prinz, J. (2006). Is the mind really modular? In R. Stainton (ed.), Contemporary Debates in Cognitive Science (Malden: Blackwell), 22–36.

—. (2012). The Conscious Brain: How Attention Engenders Experience. Oxford: OUP.

—. (ms). Faculty psychology without modularity.

Prinz, J., & Seidel, A. (2012). Alligator or squirrel: Musically induced fear reveals threat in ambiguous figures. Perception 41(12), 1535–1539.

Proffitt, D.R. (2009). Affordances matter in geographical slant perception: Reply to Durgin, Baird, Greenburg, Russell, Shaughnessy, and Waymouth. Psychonomic Bulletin & Review 16(5), 970–972.

Pylyshyn, Z. (1999). Is vision continuous with cognition? The case for cognitive impenetrability of visual perception. Behavioral and Brain Sciences 22(3), 341–365.

Quilty-Dunn, J. (2016). Iconicity and the format of perception. Journal of Consciousness Studies 23(3-4), 255–263.

Quilty-Dunn, J. (2017). Syntax and Semantics of Perceptual Representation. Dissertation, The Graduate Center, CUNY.

Ramachandran, V.S. (1988). Perceiving shape from shading. Scientific American 256(8), 76–83.

Reverberi, C., Pischedda, D., Burigo, M., & Cherubini, P. (2012). Deduction without awareness. Acta Psychologica 139, 244–253.

Scholl, B. (1997). Reasoning, rationality, and architectural resolution. Philosophical Psychology 10(4), 451–470.

Shea, N. (2014). Distinguishing top-down from bottom-up effects. In D. Stokes, M. Matthen, & D. Biggs (eds.), Perception and Its Modalities (Oxford: OUP), 73–91.

Siegel, S. (2012). Cognitive penetrability and perceptual justification. Noûs 46(2), 201–222.

Siegel, S. (2013). Can selection effects on experience influence its rational role? In T. Gendler (ed.), Oxford Studies in Epistemology, Vol. 4 (Oxford: OUP), 240–270.

Stokes, D. (2014). Cognitive penetration and the perception of art. Dialectica 68(1), 1–34.

Stokes, D. (forthcoming). Attention and the cognitive penetrability of perception. Australasian Journal of Philosophy.

Sun, J., & Perona, P. (1998). Where is the sun? Nature Neuroscience 1(3), 183–184.

Vickery, T.J., & Chun, M.M. (2010). Object-based warping: An illusory distortion of space within objects. Psychological Science 21(12), 1759–1764.

Wu, W. (2014). Attention. New York: Routledge.

Notes

[1] Though ‘implicit’ in the sense of being unconscious does not entail ‘implicit’ in the sense of being unavailable for use in any mental process, the latter will entail the former given the reasonable assumption that only token representations can be conscious. Any token representation fails to be implicit in the latter sense, and thus the scope of explicit (in the sense of conscious) representation is limited to explicit (in the sense of available) representation.

[2] This reply requires the assumption that the visual system consists of a hierarchy of modules, with subsystems whose outputs function as inputs to other subsystems.

[3] Raftopoulos and Zeimbekis (2015, 27ff) argue that Pylyshyn (1999; 2003) endorses a “vehicle criterion,” according to which “perception has to draw directly on the informational resources of a cognitive system” (Raftopoulos & Zeimbekis 2015, 27) to constitute cognitive penetration. However, the passages they cite merely specify that attention cannot operate “within the early-vision system itself” (Pylyshyn 2003, 90; see also Pylyshyn 1999, 344), a specification that is sometimes cast in terms of “directness” (Gross 2017; Block ms). Cognitively driven attentional modulation that alters “the early-vision system itself” in a semantically coherent way would therefore seem to suffice for cognitive penetration by Pylyshyn’s lights. Like Firestone and Scholl’s, Pylyshyn’s argument against violations of encapsulation by attention rests on the empirical assumption that attention operates only on the inputs and outputs of perceptual systems rather than within the operations of those systems.

Pylyshyn does sometimes cast impenetrability in terms of information access (e.g., 1999, 360), and Gross (2017) stipulates that cognitive penetration involves “directness” in terms of operation within perceptual processing as well as “directness” in terms of information access. A primary goal of this paper is to pull these various ideas apart and argue both that encapsulation should be understood solely in terms of information access and that attentional effects do not violate encapsulation so understood. The notion of cognitive penetration in terms of semantically coherent effects (even building in “directness” in terms of operation within perceptual processing rather than merely on inputs) is distinct from encapsulation and has normatively significant upshots. Pace Gross, I think this notion of cognitive penetration should therefore be articulated independently of questions about information access and encapsulation.

[4] One can also think of information access as defined by the array of parameters that can be set in a computation. For example, distance perception may contain a parameter for whether the perceived object is desired (Balcetis & Dunning 2010)—e.g., if the object is desired, then the parameter is set to YES. Effects mediated by attention don’t involve a parameter being set directly by the presence of a state in cognition, so such effects don’t violate encapsulation.

[/expand]

[expand title=”Invited Comments from EJ Green (MIT)” elwraptag=”p”]

Comments on Jake Quilty-Dunn, “Attention and Encapsulation”

E.J. Green

Department of Linguistics and Philosophy

MIT

Jake Quilty-Dunn’s paper, “Attention and Encapsulation,” offers a thoughtful, concise, and provocative study of the implications of top-down attention for theories of the perception/cognition border. Quilty-Dunn defends what he acknowledges to be an “extreme thesis”—namely, that “no effect of attention, either on the inputs to perceptual processing or on perceptual processing itself, constitutes a violation of the encapsulation of perception” (Quilty-Dunn, 1). A secondary theme of Quilty-Dunn’s paper is that debates surrounding encapsulation ought to be separated from debates about cognitive penetration, since, he argues, perception may be cognitively penetrable while remaining encapsulated.

Like Quilty-Dunn, I am sympathetic to the idea that progress in characterizing the perception/cognition border will require distinguishing among alternative ways in which cognition might “coherently” affect perception. I also agree that there are limitations on the kinds of influences that top-down attention can facilitate, and that these should be catalogued. And, finally, I agree that there are surely ways for cognition to influence perception that “wreak epistemic havoc” (Quilty-Dunn, 10), irrespective of whether such influences count as either cognitive penetration or violations of encapsulation.

In these comments, I’ll focus primarily on two issues. First, I’ll raise questions about how we should understand the notions of encapsulation and information access employed in the paper. Second, I’ll press Quilty-Dunn to clarify his central case for the conclusion that no influence mediated by attention can constitute a violation of encapsulation.

1. Information Access and Encapsulation

Quilty-Dunn writes: “Encapsulation is a matter of information access” (1). It is central to his argument that attention is not a mechanism by which perception comes to “access” information stored in cognition. So, to properly evaluate the argument, we first need to understand the notion of information access. Fortunately, Quilty-Dunn devotes a good deal of discussion to this issue.

Quilty-Dunn proposes that we should understand information access, and so the requirements for encapsulation, in terms of the store of information “available” to a system. There are two ways in which information can be available to a system. First, it can be explicitly available, in which case the system stores a token representation with certain content, and retrieves or manipulates this representation during online computation. Quilty-Dunn writes:

When the system is fed its inputs, it accesses this representation (and other stored information) and derives its outputs in accordance with whatever algorithms it implements. The notion of access involves the activation of a token representation and its functioning directly and without independent intervention in the relevant processes. (3)

This variety of availability is distinguished from implicit availability. In these cases there is no stored representation that represents the relevant information explicitly. Rather, the information is merely “embodied” in the state transitions that the system is disposed to undergo. Using a hypothetical case in which the visual system’s assumption that light comes from above is implicit in this sense, he writes:

In the implicit case…there’s no such representation stored anywhere. Instead, the algorithms implemented by the system accord with the principle that light comes from above and to the left. That information isn’t available for use by any process; it’s merely implicit (or “embodied”—e.g., Devitt 2006, 45) in the operations of the system (Shea 2014). (2)

Quilty-Dunn counts information as within a system’s “proprietary store” as long as it is either explicitly or implicitly available. This leads to his account of encapsulation: “System A is encapsulated from System B when A has a proprietary store of information that excludes information stored in B” (3).
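The contrast between explicit and implicit availability can be pictured with a toy sketch. It is an illustration only, not a model drawn from Quilty-Dunn's paper or from vision science: the class `ExplicitShadingSystem`, the function `implicit_classify`, and the "top"/"bottom" encoding of the bright edge are all hypothetical, and the lighting assumption is simplified to "from above." In the first system the assumption is a stored value that the computation reads; in the second, the procedure merely accords with the assumption without storing it anywhere.

```python
from dataclasses import dataclass


@dataclass
class ExplicitShadingSystem:
    """Hypothetical system that stores the lighting assumption as a token value."""

    light_from: str = "above"  # stored representation, consulted at run time

    def classify(self, bright_edge: str) -> str:
        # The stored value is retrieved and used to decide which edge should be lit.
        lit_edge = "top" if self.light_from == "above" else "bottom"
        return "convex" if bright_edge == lit_edge else "concave"


def implicit_classify(bright_edge: str) -> str:
    """Hypothetical system with no stored assumption.

    The mapping accords with light coming from above, but nothing in the
    function can be retrieved as a representation of that principle.
    """
    if bright_edge == "top":
        return "convex"
    return "concave"
```

Both procedures compute the same input-output mapping by default; they differ in whether the principle is a retrievable item or only a regularity in how the mapping is computed. Changing the explicit system means changing a stored value, whereas changing the implicit one means rewriting the procedure itself.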

But under what conditions are pieces of information stored in B “excluded” from A’s proprietary information store? Unfortunately, I find this notion somewhat unclear. I’ll illustrate this with three types of cases. It is unclear to me which, if any, of these Quilty-Dunn would count as cases in which information stored in system B is entered into system A’s proprietary information store, thus violating A’s encapsulation with respect to B.

First, there are cases of content duplication. In these cases, system B reliably causes system A to token representations that have the same contents as representations stored in system B, but which are token-distinct and different in format from the representations stored in system B. Second, there are cases of formal duplication. In these cases, system B can reliably cause system A to token representations that have both the same content and the same formal/syntactic properties as representations stored in system B, but which are token-distinct from representations stored in system B. Third, there are cases of token vehicle availability. In these cases, token representations (understood as mental particulars with certain contents) stored in system B are themselves retrieved by system A.
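The three cases can be told apart in a small sketch, offered only as an aid to keeping them distinct. The `Token` type, its `content` and `fmt` fields, and the `relation` function are hypothetical names introduced for illustration; object identity stands in for sameness of token vehicle, and field equality stands in for sameness of content or format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Token:
    """A hypothetical token representation."""

    content: str  # what the representation says
    fmt: str      # its formal/syntactic format


def relation(b_token: Token, a_token: Token) -> str:
    """Classify how a token used by system A relates to a token stored in system B."""
    if a_token is b_token:
        # The very same mental particular is retrieved by A.
        return "token vehicle availability"
    if a_token.content == b_token.content and a_token.fmt == b_token.fmt:
        # A distinct token with the same content and the same format.
        return "formal duplication"
    if a_token.content == b_token.content:
        # A distinct token with the same content in a different format.
        return "content duplication"
    return "no duplication"
```

On this way of drawing the lines, the cases are ordered by strength: token vehicle availability requires identity of the particular, formal duplication requires matching content and format, and content duplication requires matching content only.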

My first question is this. Do any of these cases suffice for a violation of encapsulation? And if one or more of these cases do not suffice, why do they not suffice?

Certain passages suggest that Quilty-Dunn requires something quite strong indeed for perception to access information from cognition, and certainly something stronger than content duplication. Consider his remarks on pp. 8-9. Here, Quilty-Dunn grants that attention can access information in cognition, but argues that as long as attention is distinct from the perceptual processes that it modulates (e.g., perception of contrast or saturation), this is not a way in which cognitive information can enter perception’s proprietary store. In support of this, he writes:

There is a clear difference between (i) a process that accesses representation R and (ii) a process that’s affected by a separate process that accesses R. The latter process does not access R, and therefore does not have R within its store of information. (9)

In other words, if a process is affected only via an intervening process that accesses representation R, then R (and presumably also the information it encodes?) is therefore not within the former’s store of information. It seems, then, that for Quilty-Dunn, information access must be direct or unmediated. If information from system B can only influence system A via an intervening process, then information in B is therefore excluded from system A’s proprietary store.

Note, however, that if this is true, then content duplication—no matter how pervasive and systematic—is not sufficient for information access. To illustrate, suppose that perception and cognition compute in formally distinct languages that have the same expressive power. While cognition can freely “transmit” information to perception, it can only do so by way of a third system—a compiler—that functions to translate sentences in the language of thought into sentences in the language of perception. If content duplication were sufficient for information access, then this would be a case in which perception accesses information from cognition, despite cognitive influences on perception always being indirect.
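The compiler scenario can be made concrete with a minimal sketch under stated assumptions. Everything here is hypothetical: the two "languages" are toy string formats, `compile_thought` is an invented translator, and `Perception` is a stand-in for a system with its own store. The point of the sketch is that perception never reads cognition's store, yet ends up holding content-matched tokens.

```python
def compile_thought(sentence: str) -> str:
    """Hypothetical compiler: translate 'PRED(arg)' into the toy perceptual format 'arg:pred'."""
    predicate, _, rest = sentence.partition("(")
    return f"{rest.rstrip(')')}:{predicate.lower()}"


class Perception:
    """Hypothetical perceptual system with its own store and no reference to cognition."""

    def __init__(self) -> None:
        self.store: list[str] = []

    def receive(self, perceptual_sentence: str) -> None:
        # Only sentences already in the perceptual format ever arrive here.
        self.store.append(perceptual_sentence)


# Cognition's store, written in a toy language of thought.
cognition_store = ["DESIRED(apple)", "RED(apple)"]

perception = Perception()
for thought in cognition_store:
    # The only route from cognition to perception runs through the compiler.
    perception.receive(compile_thought(thought))
```

After the loop, `perception.store` holds `["apple:desired", "apple:red"]`: the same contents as cognition's store, in a different format and as distinct tokens, delivered entirely by an intervening process. Whether this counts as information access is exactly the question at issue.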

In any event, one might argue that any notion of information encapsulation worthy of the name should count pervasive content duplication as a case of non-encapsulation. “Information” is typically construed in either a probabilistic or a semantic sense (e.g., Dretske 1981). I take it that Quilty-Dunn does not intend it in the former, purely information-theoretic sense, but rather to mean, roughly, the content that a representation carries. But, if so, then shouldn’t we count cases in which content is reliably but indirectly transmitted between cognition and perception as cases in which information from cognition enters perception’s proprietary information store, and so is not excluded from it? After all, there is no respect in which perception is “blocked” from representing and computing over the contents of one’s beliefs and desires. It’s just that it can only do so by way of an intervening process that helps cognition and perception to communicate.

Of course, none of this would show that we couldn’t distinguish perception from cognition. This would be particularly plausible if, say, the two computed in formally distinct languages and could only communicate via an intermediary. But, as Quilty-Dunn rightly notes (p. 4), perception’s being distinct from cognition doesn’t entail its being encapsulated from cognition.

Thus, there are two views on the table. One is that information from cognition is excluded from perception’s proprietary information store. Another is that influences of cognition on perception are always mediated by some further psychological process (e.g., attention). My second question, then, is this. What is the relation between these views? Does the latter entail the former? If so, what secures this entailment, and why isn’t reliable but indirect content transmission sufficient for perception to access information from cognition?

2. Attention and Encapsulation: Quilty-Dunn’s Argument

The discussion to this point has primarily concerned the notion of encapsulation, and in particular what kinds of information transmission would violate it. But perhaps we need not clear up these issues to appreciate that attentional effects simply can’t violate encapsulation. So let’s turn to Quilty-Dunn’s main argument.

The central line of thought appears on page 7:

It may be clear at this point why an effect of cognition on perception mediated by attention cannot violate encapsulation: it is not a form of information access. A state in cognition may cause an attentional effect on perceptual processing and thereby alter the output of perception. Moreover, it may do so in a semantically coherent way, such that the output of perception is semantically related to the state in cognition. But the state in cognition isn’t thereby just another representation housed in the perceptual system’s store of information. Instead, it can only exert an influence on the perceptual system by way of attentional prioritization and modulation.

Quilty-Dunn argues in this passage that any effect of cognition that is mediated by attention cannot be a case in which perception accesses information stored in cognition. The reason is that attentional influences are limited to prioritization (biasing perceptual selection) and modulation (altering the gain or tuning of perceptual responses), and these aren’t mechanisms by which a state in cognition comes to be “housed in the perceptual system’s store of information.” But if a state in cognition does not come to be so housed, then information in cognition fails to be added to perception’s information store, so encapsulation is preserved.

Thus, the argument relies on a claim about the limits of attention-mediated influences, and moves eventually to a conclusion about encapsulation. I’ll first consider the status of the initial claim, and then I’ll raise some concerns about the transition from this to the conclusion.

Granting the rest of the argument for now, it is crucial for Quilty-Dunn that attentional influences are exhausted by prioritization and modulation. Otherwise, we could not be certain that there are no attentional effects that work in other ways, and that do result in cognitive information’s becoming housed in perception’s information store. This claim, however, may be construed in either of two ways. Either it is an empirical claim warranted by psychological and neurophysiological evidence, or it is an a priori claim that falls out of the concept of attention.

If it is the former, then what is the evidence that attentional influences are strictly limited in this manner? Of course, attentional prioritization and modulation have received extensive investigation (Carrasco 2011). But this doesn’t establish that these are the only influences attention may exert. Some vision scientists have recently argued for quite rich views according to which attention, for example, “warps the entire semantic space, expanding the representation of nearby semantic categories at the cost of more distant categories” (Çukur et al. 2013). Perhaps such views are unwarranted, or perhaps the functional roles they carve out for attention can be shown to reduce to some combination of prioritization and modulation. In any case, if Quilty-Dunn means to infer limits on possible attentional effects from the vision science, one wonders whether a more extensive survey of the literature is needed.

Quilty-Dunn may instead hold that it is a priori that attention can only involve prioritization and modulation. If so, then he is effectively arguing that no amount of evidence could ever show that an effect mediated by attention violated encapsulation. If we found that an influence did violate encapsulation, then we would be mistaken in calling it “attention.” Indeed, if researchers uncovered some influence beyond prioritization and modulation and labeled it “attention,” then we would need to conclude that they were misdescribing their topic of investigation.

Quilty-Dunn’s introduction offers some textual basis for interpreting him as making the a priori claim. For example, he describes his topic as among the “a priori disputes about which mental processes constitute violations of encapsulation” (1). However, it is not entirely clear from this which part of the dispute is supposed to be a priori. Is it a priori both that attentional effects are limited to prioritization and modulation and that, given this, effects of attention can’t violate encapsulation? Or is only the second part supposed to be a priori, with the claim about attention’s limitations justified by vision science?

Thus, my third question is this. Is the claim that attentional effects are limited to prioritization and modulation intended as an empirical claim supported by vision science, or is it an a priori claim that falls out of the concept of attention? (Or perhaps somewhere in between?) Moreover, if it is the latter, is the relevant concept of attention a folk concept, or is it a theoretical concept supplied by cognitive science?

Let’s now consider the remainder of the argument—the move from the claim about the limited effects of attention to the conclusion that top-down influences mediated by attention can’t violate encapsulation. This goes by way of an intermediate transition. Quilty-Dunn argues that when cognition exerts an effect on perception via attention, then “the state in cognition isn’t thereby just another representation housed in the perceptual system’s store of information.” Accordingly, even if attention mediates semantically coherent effects, it clearly does not mediate information access or availability. Since the latter is needed in order to violate encapsulation, attention can’t violate encapsulation.

However, one might worry that this argument is incomplete, given the account of encapsulation provided in section 2. Recall that Quilty-Dunn distinguishes two types of information availability. Information is available within a system’s proprietary information store just in case it is either explicitly available or implicitly available by virtue of being embodied in perceptual algorithms. Quilty-Dunn’s argument in section 3, however, focuses primarily on the former type of availability. He argues that attention is not a mechanism by which cognitive states can enter perception’s information store. In other words, attention doesn’t allow a cognitive representation, and so the information it explicitly represents, to be transferred into the store of explicitly represented information that perceptual processes consult.

But if perception’s proprietary information store includes both information explicitly available and information implicitly available, then there should be two ways of violating encapsulation. First, cognition might make information P explicitly available to perception by supplying new symbols that explicitly encode P. Second, cognition might make information P implicitly available by, say, modifying perceptual algorithms so that they come to embody P. Quilty-Dunn argues that attention can’t do the former. But might it do the latter? Does he believe it is simply obvious that it cannot?

Here is one reason for thinking that the issue is not so clear-cut. Perceptual algorithms are, roughly, procedures for computing mappings from proximal inputs to distal outputs (Marr 1982). Accordingly, a change in input-output mapping indicates a change in algorithm. But notice that Quilty-Dunn grants that attention can modify the input-output mappings associated with particular perceptual processes, rather than merely changing the inputs to those processes. After all, he does not accept Pylyshyn’s (1999) claim that attention acts wholly before perceptual processing begins (Quilty-Dunn, pp. 9-10).

So my fourth question is this. When top-down attention alters the input-output mapping associated with a perceptual process (e.g., perception of contrast, saturation, or motion direction), can it thereby alter the algorithm associated with that process, at least temporarily? If not, why not? But if so, do such effects ever alter the information implicitly embodied in perceptual algorithms? And if they do, then how can we be sure that they never violate encapsulation?

Finally, I want to briefly consider Quilty-Dunn’s critique of Mole (2015) on pp. 8-9. Mole relies on a study by Kravitz and Behrmann (2011). In this study, participants were first cued to a particular location on an object, and then had to respond to a target that appeared either at the cued location or elsewhere in the display. As Quilty-Dunn notes, one key finding of this study was that responses were faster not only when the target appeared at the cued location or on the cued object, but also when it appeared on a different object that had the same shape as the object on which the cue appeared. Quilty-Dunn argues that these data can be explained purely by the attention-based prioritization of certain visual features (e.g., shape). However, one further finding from this study—which Mole treats as particularly important to his case for cognitive penetration—is that responses were also facilitated when the target appeared on an object that belonged to the same alphanumeric category as the object on which the cue appeared (e.g., an H and an h, versus an H and a 4). Mole concludes:

The cue must therefore prompt a different allocation of attention, depending on whether the screen contains an H and an h, or an H and a 4. Since registering that fact requires learnt concepts to be brought to bear, attention and conceptual thought are shown to be at least somewhat intertwined. (2015: 231)

This last finding might be deemed a more serious challenge to encapsulation than the former ones. Because visual attention in this case spread on the basis of shared alphanumeric category, rather than merely shared color or shape, one might hypothesize that cognition did expand perception’s information store. The story would go like this. Perception starts out only representing objects as having “low-level” features (color, shape, texture, motion, etc.), but doesn’t classify stimuli into alphanumeric categories. However, when alphanumeric category is made salient, cognition injects this content into perception, leading perception to represent objects as, say, particular letters or numerals. With this new classification ability in place, objects that belong to the same alphanumeric category as a cued object can be systematically prioritized for selection. This, I take it, would be an account on which cognition expands perception’s proprietary information store to include certain categories that it didn’t initially include.

I do not believe such a story is forced by Kravitz and Behrmann’s findings, and I am sure that Quilty-Dunn would agree. (In fact, I suspect that he might reply that perception is capable of alphanumeric categorization without cognitive influence.) However, the point is that this study plausibly demonstrates something beyond mere object- and feature-based attention. Kravitz and Behrmann take their findings to demonstrate category-based attention. Thus, my fifth question is this. How does category-based attention fit within an encapsulation view, and why should we think that this account of category-based attention is the correct one?

I’ll conclude with a sixth question. Debates over encapsulation (and impenetrability, for that matter) often follow a standard pattern. Person A cites an experimental or introspective observation and argues that it shows that perception is non-encapsulated (or penetrable). Person B replies that, in fact, the datum can be explained in a manner consistent with encapsulation (or impenetrability). Too seldom, however, does B also mention the types of evidence that she would accept as establishing a violation of encapsulation. So my last question is this. Can Quilty-Dunn describe a hypothetical empirical finding that he would regard as clear, unambiguous evidence that perception is not encapsulated from cognition? This would at least give opponents of encapsulation an idea of where to look.

References

Carrasco, M. (2011). Visual attention: The past 25 years. Vision Research, 51(13), 1484-1525.

Çukur, T., Nishimoto, S., Huth, A. G., & Gallant, J. L. (2013). Attention during natural vision warps semantic representation across the human brain. Nature Neuroscience, 16(6), 763-770.

Dretske, F. I. (1981). Knowledge and the Flow of Information. Cambridge, MA: MIT Press.

Kravitz, D. J., & Behrmann, M. (2011). Space-, object-, and feature-based attention interact to organize visual scenes. Attention, Perception, & Psychophysics, 73(8), 2434-2447.

Marr, D. (1982). Vision: A Computational Investigation into the Human Representation and Processing of Visual Information. San Francisco, CA: W.H. Freeman.

Mole, C. (2015). Attention and cognitive penetration. In J. Zeimbekis & A. Raftopoulos (eds.), The Cognitive Penetrability of Perception: New Philosophical Perspectives, pp. 218-237. Oxford: Oxford University Press.

Pylyshyn, Z. W. (1999). Is vision continuous with cognition? The case for cognitive impenetrability of visual perception. Behavioral and Brain Sciences, 22(3), 341-365.

[/expand]

[expand title=”Invited Comments from Wayne Wu (Carnegie Mellon)” elwraptag=”p”]

Comments on Jake Quilty-Dunn’s “Attention and Encapsulation”

Wayne Wu

Carnegie Mellon University

Although in the end I do not agree with the conclusion, I very much enjoyed reading Quilty-Dunn’s essay. Our difference of view can be simply stated: I think the best empirical case for cognitive penetration is the case of top-down attention; Quilty-Dunn denies that any case can be made for cognitive penetration in attention. Our disagreement is rooted in differences regarding a core issue: What is attention?

Let me begin with a couple of points of agreement. I think Quilty-Dunn is correct that the epistemic significance of top-down attention effects can be separated from issues about informational encapsulation.[1] That is, many of the epistemic issues that attention raises can be pursued independently of whether attention is cognitively penetrated (this is the point of his helpful final section). The epistemic significance of attention is an area that deserves more concerted exploration. I also appreciate his focus on “computational” requirements, including operation over relevant information. Indeed, this is precisely what is needed to secure evidence for failures of encapsulation on empirical grounds. Much of the case for cognitive penetration among philosophers relies on behavioral results that underdetermine the actual mechanisms. Yet failure of encapsulation is precisely a claim about a type of information processing. My own view is that the gold standard for demonstrating a failure of encapsulation would be to ground the behavioral phenomena in clear computational and biological descriptions that merge multiple levels together in a way that shows how information from cognition is used by sensory systems.

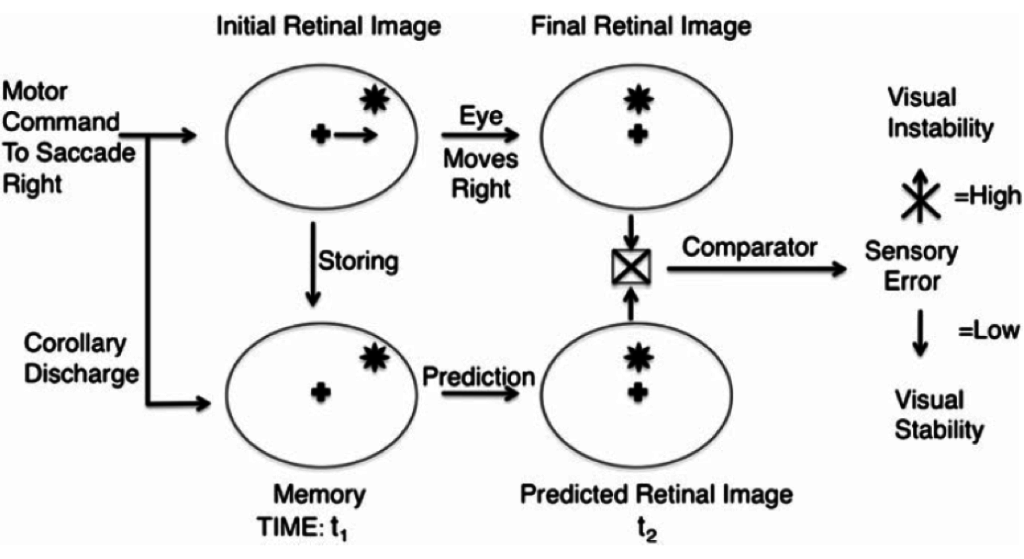

Ok, on to disagreements. In some papers of mine, I have emphasized that failure of informational encapsulation, as an empirical thesis, must be established by a multi-tiered approach that merges behavioral, computational, and biological levels of description. One can think of this in terms of Marr’s three levels of explanation as a heuristic: begin with an analysis of failure of informational encapsulation, show what that might mean computationally for a given domain, and then identify the relevant biology that implements that computation. So, let’s begin with a case that even Fodor takes to be a case of failure of encapsulation in vision, namely a visuomotor interaction that is needed to keep the world stable when the eye generates saccades.

We can follow Quilty-Dunn’s sufficient condition (p. 3) as follows:

if A can access information held in B (and presumably compute over it), then A is not encapsulated from B

I would be happy to say that B informationally penetrates A, but that is not Quilty-Dunn’s preferred way of putting matters since he allows for semantically coherent covariation that falls short of failure of encapsulation. The question of why the world seems stable when the eye moves, despite the sweep of the world image across the retina, illustrates a failure of encapsulation, for to keep the world stable, the visual system requires information from the motor system. That is, if the visual system has motor information about which direction the eye moves, then it can compensate for the shift in the retinal image, generating a stable percept despite movement. Here’s one depiction of the computation (from Wu, 2013):

The relevant motor representation is the corollary discharge signal that is used by visual working memory to predict the retinal image after eye movement. In the paper from which the figure is taken, I argue that intention informationally penetrates vision via maintaining spatial constancy through the motor signal (corollary discharge) but since there are worries about “directness” and since the case is not directly about attention, I shall forgo it (but see (Wu 2013) for discussion of directness).
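To make the informational point concrete, here is a minimal sketch of the kind of computation at issue. It is an illustration only and not the model depicted in Wu (2013): the function names, the two-dimensional coordinates in degrees of visual angle, and the tolerance value are all assumptions introduced for the example. The idea is simply that a prediction of the post-saccadic retinal image requires the motor signal as an input.

```python
# Illustrative sketch only: names, units, and tolerance are assumptions,
# not taken from Wu (2013) or from any specific model in the literature.

def predict_retinal_position(retinal_pos, saccade_vector):
    """Predict where a stationary object will fall on the retina after a saccade.

    retinal_pos:    (x, y) position before the eye movement, in degrees.
    saccade_vector: (dx, dy) eye displacement carried by the corollary
                    discharge signal, in degrees.
    """
    x, y = retinal_pos
    dx, dy = saccade_vector
    # When the eye moves by (dx, dy), the image of a stationary object
    # shifts by the opposite amount on the retina.
    return (x - dx, y - dy)


def world_appears_stable(pre_pos, post_pos, saccade_vector, tolerance=0.5):
    """Return True if the observed post-saccadic position matches the prediction."""
    pred_x, pred_y = predict_retinal_position(pre_pos, saccade_vector)
    return (abs(post_pos[0] - pred_x) <= tolerance
            and abs(post_pos[1] - pred_y) <= tolerance)


# With the motor signal, the retinal shift is attributed to the eye movement.
with_signal = world_appears_stable((10.0, 0.0), (6.0, 0.0), (4.0, 0.0))

# Without it (a zero vector stands in for "no motor information"),
# the same retinal shift would be read as movement in the world.
without_signal = world_appears_stable((10.0, 0.0), (6.0, 0.0), (0.0, 0.0))
```

On this toy picture, the visual computation cannot return the right verdict unless it has access to information originating in the motor system, which is the sense in which the case fits the sufficient condition above.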

What then is the right claim to make if attention were to show that perception, say vision, is informationally penetrated by cognition? In the case of top-down attention, in a paradigm form, we can say that the relevant cognitive state will be an intention. Now, at this point, it seems essential to have a clear view of what attention is. Quilty-Dunn seems to sidestep the issue (fair enough, it’s a complicated matter). He notes, correctly, that theorists take attention to be “the selection or prioritization of information for further processing” though he notes that this is a controversial issue. It is worth emphasizing that more must be said since “selection of information for further processing” is not sufficient for attention in the relevant sense. It is not hard to find counterexamples to the sufficiency claim (I will leave that as an exercise for the reader), so it becomes necessary to say what the selection is for.

In my book, Attention (Wu 2014), I argued that there is a uniform conception of attention shared by all theorists of attention, namely that whatever else attention might be as information selection, paradigm forms of attention involve selection to guide task performance (this echoes Chris Mole’s (2013) idea of attention as cognitive unison in respect of performing tasks; Jesse Prinz’s (2012) notion of attention as selection of information for working memory is an instance of attention as selection for task). So, I don’t think this idea is controversial since it is embedded in the methodology of empirical work on attention: to control a subject’s attention to X, as required if one is going to study such attention, researchers make X relevant to and indeed necessary for correct task performance. If I want you to attend to colors rather than shapes, I make colors task relevant and then I measure your performance to ensure that you are responding just to colors (if you are at chance, then one question is whether you are attending at all). So, I don’t see that there is a controversy about one form of attention as informational selection for further processing, namely as selection for task. This is the conception operative in the experimental paradigms that form test cases for queries about cognitive penetration in attention.

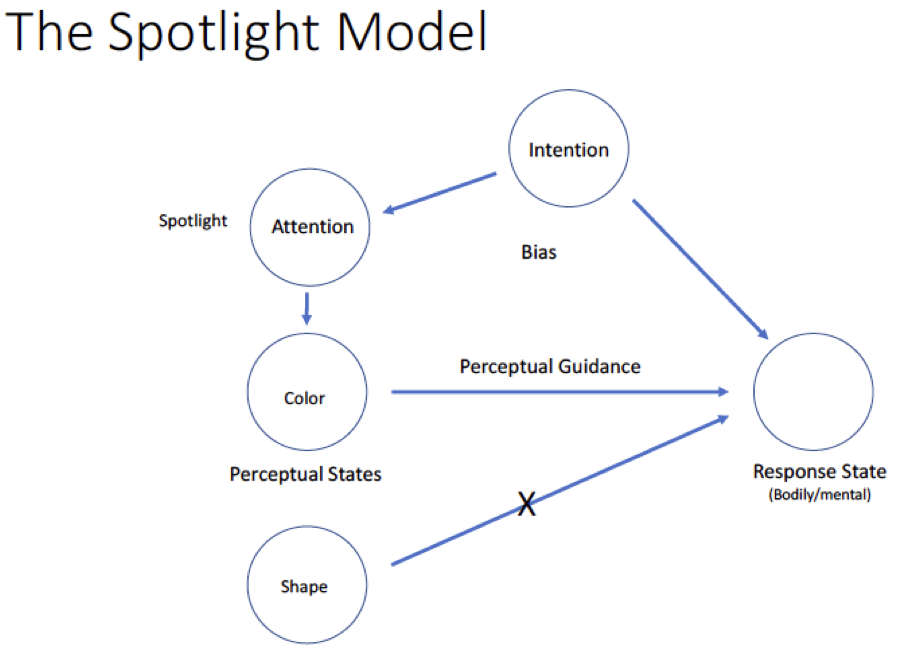

Securing this uncontroversial conception of attention allows us to see why intentions might informationally penetrate vision. Visual processing often serves action, but given the clutter of the visual field, how will visual processing serve action without some influence from intention? Quilty-Dunn does not deny this, so the question comes down to how intention might influence visual processing in a way that amounts to informational penetration (the failure of informational encapsulation).

At this point, everything comes down to the metaphysics of attention, which is why I think the issues would require more exploration in a fuller version of this paper. Quilty-Dunn’s thesis that “an effect of cognition on perception mediated by attention cannot violate encapsulation” requires a specific view of attention which is widespread, at least in metaphorical terms (the spotlight of attention), but in my mind wrong. This is a conception of attention as a distinct mechanism separate from cognition and perception which mediates between the two (it is supramodal; see Posner and Petersen 1990; Petersen and Posner 2012). We can diagram it as follows, in light of biased competition: competition in perceptual processing that is resolved by a top-down, task-representing signal (intention). The spotlight is directed by cognition (intention) to influence perception:

The problem is that despite lip-service to this model, no one has discovered what the spotlight is (Allport 1993). Note that to deny attention as a spotlight or cause yielding perceptual modulations is not to deny that there are known networks that mediate different forms of attentional effects (Corbetta and Shulman 2011). The issue is whether anything can be identified as the spotlight (or choose your favorite causal metaphor). If you were to ask theorists of attention where to locate the spotlight, given that they seem to endorse it verbally, I suspect you will just make them uncomfortable and find that they quickly relinquish the challenge (this is almost routine in my experience speaking with a number of eminent vision and attention theorists; they study attentional effects in specific task contexts but not the attentional spotlight).

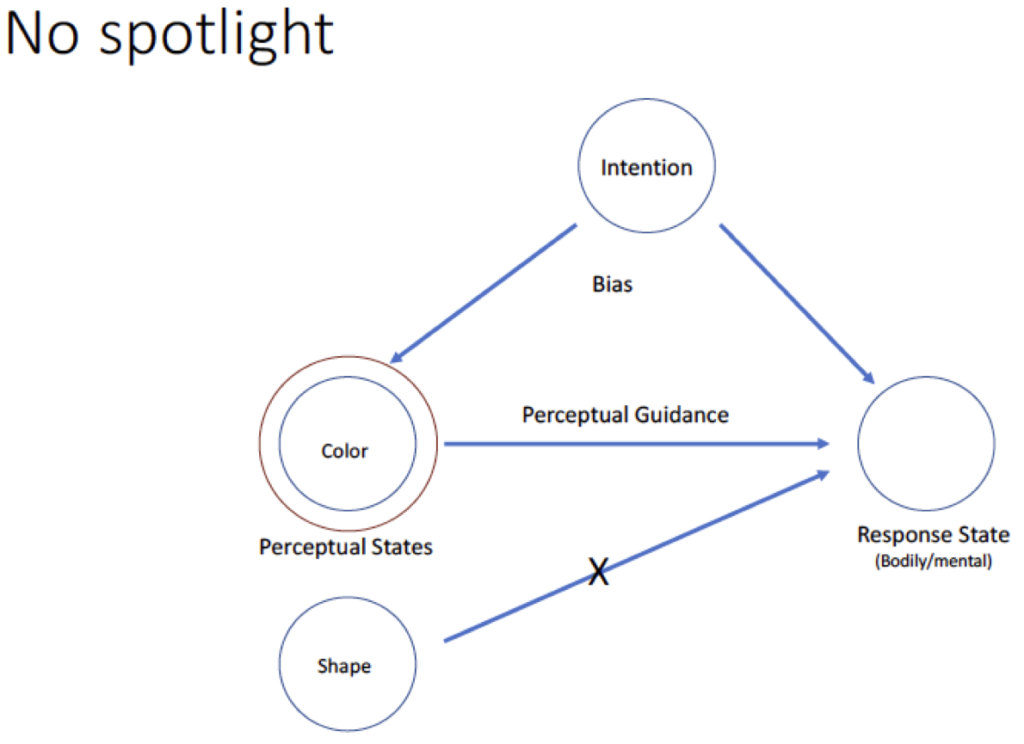

Further, we should not confuse the role of different brain areas in attention, say the frontal eye field or the superior colliculus (SC) in visual spatial attention, as identifying the spotlight. Thoughtful theorists who note the necessity of SC for attention do not thereby claim that the SC is the spotlight (Krauzlis, Lovejoy, and Zénon 2013). An alternative is just to see these brain areas as not the spotlight but as part of the circuitry that mediates intention modulating vision, namely as explaining the shift in sensory processing that is sensitive to task demands, as the subject intends.

Language does trip us up. It is insanely easy to speak of attention as modulating (causing) activity in V4 or IT or LGN (I don’t believe these claims, but even I can’t shake such language). Metaphors of attention as a cause such as the spotlight have a death grip on attentional theory. Anderson (2011) has a wonderful exercise that is worth trying: when you invoke “attention” see if you can cross it out in your text without loss of explanatory force. Ontologically, in mechanisms of attention, see if you can cross out the placeholder of the spotlight without loss of explanatory value. So, we can remove the spotlight, the arrow just indicating the circuitry, perhaps across large sections of the brain, mediating the interaction between intention and vision. We then get this picture, and I think one might see that nothing is lost.

Indeed, I think this is one interpretation of biased competition as espoused by Desimone and Duncan. I won’t claim that this is how they actually think about the mechanism, but at one point, they said this reasonably clearly:

The approach…differs from the standard view of attention, in which attention functions as a mental spotlight enhancing the processing … of the illuminated item. Instead, the model we develop is that attention is an emergent property of many neural mechanisms working to resolve competition for visual processing and control of behavior (Desimone and Duncan 1995, 194).

I think this is the right view of attention. Attention is not some additional mechanism that had to be built after we gave an agent capacities for intention, for sensory systems, and for response. The proper merging of those systems provided for a state of attentional orientation which is just a way of describing the selectivity of the system when it acts in a specific way. It’s not some additional furniture in the mind, but a way the mind comes to be in action (this goes back to our sufficient condition).

Quilty-Dunn seems to me to not engage with this possibility because he is being too accommodating regarding theories of attention, allowing space for the dominant (but I believe mistaken) conception. In his response to Mole, he notes that one might think that “attention simply is perceptual processing” (this isn’t quite Mole’s view, but no matter), but then notes that attention can’t be both identical to perceptual processing and what modulates perceptual processing (see p. 9). This is correct but as an argument against Mole, it fails to engage with Mole’s position or just begs the question, for what is at issue is precisely whether attention is in the business of modulating perception. I agree with Mole that it is not.

Once you eliminate perceptual attention as an intermediary and see it as the result of top-down influences on perceptual processing, then perceptual attention becomes a very good candidate for top-down processing. In a recent article, I make this case by drawing on a specific implementation of biased competition, what is termed divisive normalization. The point is that many measured neuronal effects that suggest differential information processing can be modeled by a process that involves competition between sensory neurons that is resolved by a top-down bias. It is interesting that in most models, that bias is labeled “attention” but as we saw, there are reasons to deny this. Again, that is just the spotlight model. Whatever that bias is, everyone agrees that it comes from task sets (intentions), so the circuits that are envisioned then can precisely be circuits for cognitive penetration (see Reynolds and Heeger 2009; Lee and Maunsell 2009. This is clear when one replaces “attention” with “intention”; see Wu Forthcoming).
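The structure of such a model can be sketched in a few lines. What follows is a deliberately simplified, non-spatial toy version of divisive normalization, not the full model of Reynolds and Heeger (2009) or Lee and Maunsell (2009), which pool the suppressive drive over space and features. The names `intention_gain` and `sigma` and all the numbers are assumptions chosen for illustration; the top-down term is labeled “intention” rather than “attention” to mirror the substitution suggested above.

```python
# Toy, non-spatial sketch of divisive normalization with a top-down bias.
# All names and values are illustrative assumptions, not published parameters.

def normalized_responses(stimulus_drives, intention_gain, sigma=1.0):
    """Return each unit's response after biased, normalized competition.

    stimulus_drives: bottom-up excitatory drive for each sensory unit.
    intention_gain:  multiplicative top-down bias per unit, set by the task
                     (1.0 means no bias).
    sigma:           positive constant that keeps the denominator nonzero
                     and sets how quickly responses saturate.
    """
    # The top-down signal scales each unit's drive before competition.
    weighted = [g * d for g, d in zip(intention_gain, stimulus_drives)]
    # Every unit is divided by the pooled activity of all units, so a boost
    # to one unit also suppresses its competitors.
    pool = sigma + sum(weighted)
    return [w / pool for w in weighted]


# Two equally strong stimuli compete for representation.
drives = [10.0, 10.0]

# No task bias: both units respond equally.
neutral = normalized_responses(drives, [1.0, 1.0])

# The task makes the first stimulus relevant: its unit gains, the other loses.
biased = normalized_responses(drives, [2.0, 1.0])
```

Nothing in the sketch corresponds to a separate spotlight. The only inputs are the sensory drives and a task-derived gain, and the selective pattern of responses falls out of how the two are combined.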

A lot might then seem to hang on “direct” and while a requirement that intention “directly” influences vision seems intuitive, it also seems to me one of those philosophical problems that won’t get science very far. Assume that a population of neurons is what realizes an intention and that their influence is separated from the relevant part of the visual system by three synapses. Does that mean the influence of intention on vision is indirect? Perhaps one way to address this is to ask whether the linking neurons themselves realize other psychological states. If they do not, if they merely transmit the causal influence between the realizers of intention and perception, then the influence is psychologically direct though not neurally direct. I don’t think this issue can be settled a priori, however. It has an important empirical element, and this is why I speak of an empirical case of cognitive penetration. Still, the issues clearly have an important philosophical element.

I don’t expect to resolve this issue here but I want to draw a moral. If attention is going to be important in our thinking about any aspect of mind, then we need to resolve the metaphysics of attention. Humbly, I suggest that the two best worked out views of the metaphysics of attention, for all their differences, both focus on tasks/action and deny that attention is a spotlight (this is Mole’s view and my own; I should note that Sebastian Watzl has recently published a book on attention I have not yet examined). Attention is at its core something that is tied to helping us deal more effectively with things. I strongly suspect that all relevant notions of attention are descriptions of that basic fact.

References

Allport, A. 1993. “Attention and Control: Have We Been Asking the Wrong Questions? A Critical Review of Twenty-Five Years.” In Attention and Performance XIV (Silver Jubilee Volume): Synergies in Experimental Psychology, Artificial Intelligence, and Cognitive Neuroscience, 183–218. Cambridge, MA: MIT Press.

Anderson, Britt. 2011. “There Is No Such Thing as Attention.” Frontiers in Theoretical and Philosophical Psychology 2: 246. doi:10.3389/fpsyg.2011.00246.

Corbetta, Maurizio, and Gordon L. Shulman. 2011. “Spatial Neglect and Attention Networks.” Annual Review of Neuroscience 34: 569–99. doi:10.1146/annurev-neuro-061010-113731.

Desimone, Robert, and John Duncan. 1995. “Neural Mechanisms of Selective Visual Attention.” Annual Review of Neuroscience 18: 193–222. doi:10.1146/annurev.ne.18.030195.001205.

Krauzlis, Richard J., Lee P. Lovejoy, and Alexandre Zénon. 2013. “Superior Colliculus and Visual Spatial Attention.” Annual Review of Neuroscience 36 (July): 165–82. doi:10.1146/annurev-neuro-062012-170249.

Lee, Joonyeol, and John H. R. Maunsell. 2009. “A Normalization Model of Attentional Modulation of Single Unit Responses.” PLoS ONE 4 (2): e4651. doi:10.1371/journal.pone.0004651.

Mole, Christopher. 2013. Attention Is Cognitive Unison: An Essay in Philosophical Psychology. OUP USA.

Petersen, Steven E., and Michael I. Posner. 2012. “The Attention System of the Human Brain: 20 Years After.” Annual Review of Neuroscience 35 (1): 73–89. doi:10.1146/annurev-neuro-062111-150525.

Posner, Michael I., and Steven E. Petersen. 1990. “The Attention System of the Human Brain.” Annual Review of Neuroscience 13 (1): 25–42. doi:10.1146/annurev.ne.13.030190.000325.

Prinz, Jesse. 2012. The Conscious Brain. Oxford: Oxford University Press.

Reynolds, John H., and David J. Heeger. 2009. “The Normalization Model of Attention.” Neuron 61 (2): 168–85. doi:10.1016/j.neuron.2009.01.002.

Wu, Wayne. Forthcoming. “Shaking the Mind’s Ground Floor: The Cognitive Penetration of Visual Attention.” The Journal of Philosophy.